Hello navigators of the digital plane!

It has been a long time since my last check-in, but rest assured there has been much movement in the world of formats migration at Harvard. Today I bring you more musings on the integrity of digital images and some of the psychedelic visual processes therein. The theme today is that looks can be deceiving…not unlike one of these!!!

Can you see the hidden image??? Hint: It’s an elephant.

Okay, well maybe the work I’ve been doing is actually quite different, but anyways…

The last month and a half has been devoted to deep analysis of the Photo CD format, a pageant-style selection of potential target formats and tools, and conversion testing and quality assurance carried out with members of the Imaging Services department. Moving into the action phase of a project is always very gratifying after months of planning and preparation, and this past month has made clear to me why that phase must be planned carefully and deliberately. Deciding on a format’s significant properties (our “wish list” for successfully migrating a format), setting criteria for potential tools and target formats, and creating metrics for judging the success of the conversions all require the involvement of multiple stakeholders who can ensure that nothing is overlooked. To reach this consensus, it is up to us in Digital Preservation to document our analysis of the format (both of the files as they exist in the Harvard repository and of the format’s technical specifications) and to clearly articulate the risks and the options that currently exist for avoiding them. Based on our analysis of the format at large, we defined its significant properties as follows:

- A tool that correctly interprets PhotoYCC (in which luminance and chrominance are stored in separate channels) and maps its color information to a device-independent color space without clipping is essential. If the output color space is RGB, the YCC color information should fit into it without clipped levels and with the widest color and grayscale gamut possible.

- Scene Balance Algorithms (SBAs) are calibration settings applied to the analog object during the initial scanning process, chosen according to the material (type of film stock, slide, object, etc.). A conversion tool should be able to account for these adjustments so that highlights are not blown out and the photographic object is rendered with accurate chrominance and luminance information.

- Image Pac compression is a very efficient form of mathematically lossless encoding that stores the image at a Base resolution (768 x 512), which a PCD-compliant application expands into a full-resolution image (3072 x 2048). Conversion tools will need to be able to unpack the Base-resolution data into the full-resolution image to ensure that images are migrated losslessly.

- PCD bit streams have an 8-bit depth per channel, allowing for 16–24 bits per pixel, and use compression/subsampling for efficient, mathematically lossless encoding of color information. Ideally, a target format should be able to contain all the same information losslessly using an efficient compression algorithm.

- The format contains embedded technical and provenance metadata, particularly about the original scanning process and Scene Balance Algorithm adjustments. If the conversion tool cannot extract this metadata, other tools should be considered.
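The Base-plus-expansion idea behind Image Pac can be illustrated with a toy two-level scheme: keep a half-resolution base image, predict the full-resolution image by upsampling it, and store only the residual (the difference from that prediction), so a decoder recovers every original sample exactly. This is a minimal sketch under our own assumptions, not Kodak’s actual Image Pac algorithm, whose details (including how the residuals are compressed) differ; the function names, pixel replication as the upsampling step, and single-channel integer images are all illustrative choices. Applying such a step twice quadruples each dimension, which is the ratio between Base (768 x 512) and full resolution (3072 x 2048).

```python
# Toy "base + residual" hierarchy: a hypothetical illustration of the idea
# behind Image Pac, not Kodak's actual encoding. Images are lists of rows of
# integer samples for a single channel.

def downsample(img):
    """Keep every second sample in each dimension to form the base image."""
    return [row[::2] for row in img[::2]]

def upsample(base, height, width):
    """Predict a height x width image from the base by pixel replication."""
    return [[base[y // 2][x // 2] for x in range(width)] for y in range(height)]

def encode(img):
    """Split an image into a base image and a residual (original minus prediction)."""
    height, width = len(img), len(img[0])
    base = downsample(img)
    prediction = upsample(base, height, width)
    residual = [[img[y][x] - prediction[y][x] for x in range(width)]
                for y in range(height)]
    return base, residual

def decode(base, residual):
    """Rebuild the full-resolution image exactly from the base plus the residual."""
    height, width = len(residual), len(residual[0])
    prediction = upsample(base, height, width)
    return [[prediction[y][x] + residual[y][x] for x in range(width)]
            for y in range(height)]

image = [[10, 12, 11, 13],
         [10, 12, 12, 13],
         [20, 21, 22, 22],
         [20, 20, 23, 22]]
base, residual = encode(image)
print(base)                             # [[10, 11], [20, 22]]
print(decode(base, residual) == image)  # True
```

On smooth photographic content the residuals stay small (here none exceeds 2 in magnitude), which is what makes them cheap to store once compressed.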

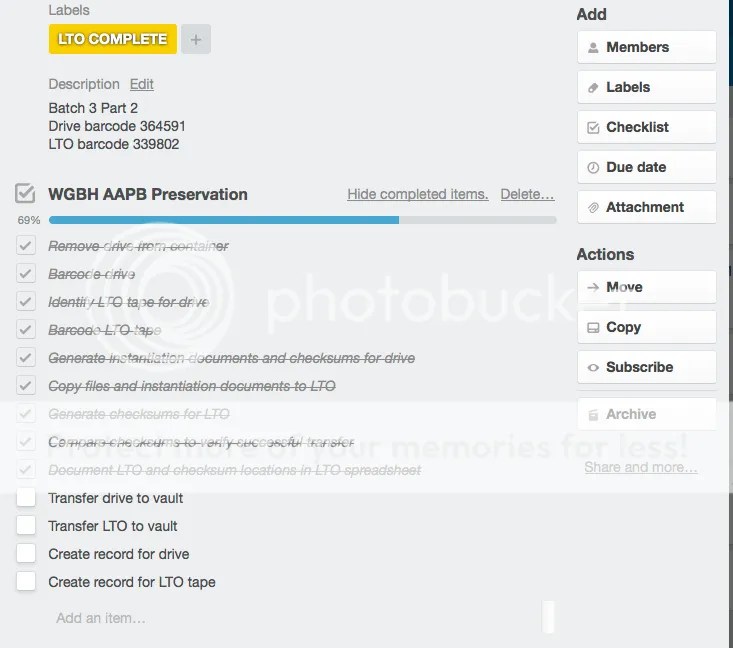

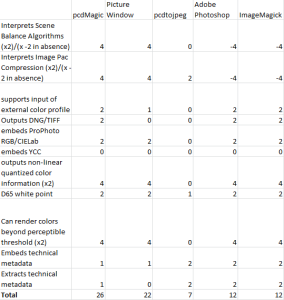

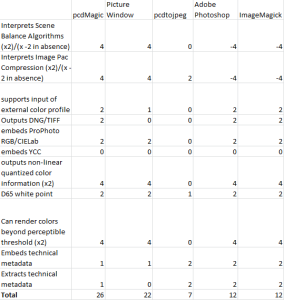

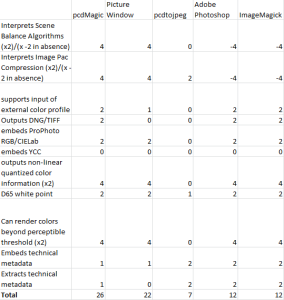

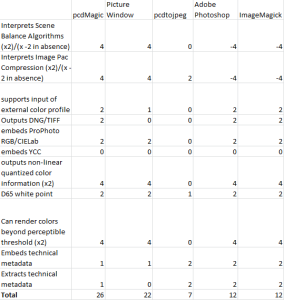

Based on these findings, we were then able to audit the available tools and possible target formats for the project. Each of these criteria was used to score each tool and format against its system and format specifications. The score sheet looked like this:

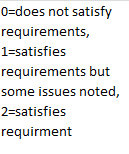

To score the acceptability of each target format and tool, a “2” was assigned when a criterion was met, a “1” if some issues were noted with that criterion (e.g. some loss of color information despite support for the color space), and a “0” when the criterion was not met. Some criteria were also weighted if they were essential (e.g. correctly interpreting an Image Pac file is absolutely essential for us to declare the conversion a success), and if a tool did not meet an essential criterion, a negative value was applied. In addition to this score sheet, we created a report that also noted tools and formats left out entirely due to lack of ongoing support.
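The scoring scheme described above can be sketched as follows, assuming a simple multiplier for essential criteria and a fixed negative score when one of them is not met. The criterion names, the weight of 3, and the penalty of -1 are hypothetical placeholders, not the values on the actual score sheet.

```python
# Hypothetical criteria, weights, and penalty for illustration only; the
# project's real score sheet used its own criteria and values.
MET, PARTIAL, NOT_MET = 2, 1, 0

def score_candidate(results, weights, essential, penalty=-1):
    """Sum weighted 0/1/2 scores; an unmet essential criterion scores negatively.

    results:   criterion -> MET, PARTIAL, or NOT_MET
    weights:   criterion -> multiplier (criteria not listed default to 1)
    essential: set of criteria that must be met
    """
    total = 0
    for criterion, value in results.items():
        weight = weights.get(criterion, 1)
        if criterion in essential and value == NOT_MET:
            total += penalty * weight
        else:
            total += value * weight
    return total

weights = {"reads_image_pac": 3}
essential = {"reads_image_pac"}
tool_a = {"reads_image_pac": MET, "maps_ycc": MET, "extracts_metadata": PARTIAL}
tool_b = {"reads_image_pac": NOT_MET, "maps_ycc": MET, "extracts_metadata": MET}
print(score_candidate(tool_a, weights, essential))  # 9
print(score_candidate(tool_b, weights, essential))  # 1
```

Making an unmet essential criterion negative, rather than merely zero, lets a single critical failure pull down a candidate that scores well elsewhere: tool B meets every other criterion but still trails tool A.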

From this assessment, we decided on the factors for creating a test set from the assortment of PCD files in the repository, as well as a number of migration scenarios covering the tool used, custom settings within the tool, target format and color space outputs, and the means for performing quality assurance (QA) on the results. Our test factors were as follows:

Tools

- pcdMagic appears to be our best option. Not only does it understand Image Pac compression and Scene Balance Algorithms, it can output ProPhoto RGB and DNG/TIFF files. It also accepts any number of input color profiles and film terms for balancing luminance and chrominance levels in the image.

- pcdtojpeg and ImageMagick can both extract technical and provenance metadata from original PCD files.
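As a rough sketch of scripting the metadata extraction with ImageMagick, the snippet below runs `identify -verbose` on a file and collects its `key: value` lines into a dictionary. The parsing is our own simplification: it flattens ImageMagick’s nested report sections, and the sample text in the usage example is illustrative rather than captured from a real PCD file, so the exact property names reported for PCD objects (such as the SBA film type) should be checked against actual output. pcdtojpeg is not covered here.

```python
import subprocess

def identify_verbose(path):
    """Run ImageMagick's `identify -verbose` on a file and return its text report."""
    result = subprocess.run(["identify", "-verbose", path],
                            capture_output=True, text=True, check=True)
    return result.stdout

def parse_properties(report):
    """Collect 'key: value' lines into a flat dict.

    Splitting on the first ': ' (colon plus space) keeps keys such as
    'date:create' and values containing colons (timestamps) intact.
    Section headers like 'Properties:' have no value and are skipped.
    """
    properties = {}
    for line in report.splitlines():
        key, sep, value = line.strip().partition(": ")
        if sep and value:
            properties[key] = value.strip()
    return properties

# Illustrative report text (not real output from a PCD file).
sample = """Image: sample.pcd
  Geometry: 768x512+0+0
  Properties:
    date:create: 2014-12-19T10:00:00+00:00
"""
print(parse_properties(sample)["date:create"])  # 2014-12-19T10:00:00+00:00
```

In practice `parse_properties(identify_verbose("some_file.pcd"))` would be run over each file, with the resulting fields then mapped onto the repository’s metadata schema.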

Output

- Support for YCC is dwindling in most digital photo formats, so we wanted to try mapping to a new color space. Our research found that CIELab and ProPhoto RGB would be acceptable color spaces that would introduce minimal (and possibly zero) loss of information. However, no tool on the market can both interpret PCD and map YCC to CIELab, so ProPhoto RGB is our only option.

- DNG and TIFF are the best options for a target format, and both can be exported from pcdMagic. One drawback of DNG is that it is a raw photo format and is not always natively understood by photo applications. Additionally, pcdMagic outputs DNG with a color space that is only “similar to” ProPhoto RGB: characterization tools such as ImageMagick report a color profile name of “pcdMagic DNG profile”, whereas the TIFF contains a true ProPhoto RGB profile. The one drawback of TIFF is that it lacks lossless encoding as efficient as Image Pac: a 6 MB PCD file would need to be expanded to a 36 MB file to contain the same information losslessly. This does not necessarily count against TIFF in our final choice of target format, since it affects storage space rather than the success of the conversion, but it is nonetheless a consideration.
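The 36 MB figure is consistent with a simple back-of-the-envelope calculation, assuming (our assumption, not confirmed for pcdMagic’s output) an uncompressed three-channel TIFF at the full 3072 x 2048 resolution with 16 bits per channel, and ignoring headers and metadata:

```python
def uncompressed_bytes(width, height, channels=3, bits_per_channel=16):
    """Raw pixel payload of an uncompressed image, ignoring headers and metadata."""
    return width * height * channels * bits_per_channel // 8

MIB = 1024 * 1024  # sizes below are in mebibytes

full_16bit = uncompressed_bytes(3072, 2048)
full_8bit = uncompressed_bytes(3072, 2048, bits_per_channel=8)
print(full_16bit / MIB)  # 36.0
print(full_8bit / MIB)   # 18.0
```

At 8 bits per channel the same image would be about 18 MB, so the bit depth chosen for the TIFF roughly doubles the storage cost. TIFF’s lossless compression options (such as LZW or Deflate) could reduce these sizes somewhat, depending on image content.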

Settings and Scenarios

- Images from the Harrison D. Horblit Collection of early photography (6628 of the 7243 total PCD files) were photographed on standard color negative film stock and scanned by Boston Photo in an ISO 3664-compliant environment. The photos were scanned uncropped, with color bars for calibration, and upon return from Boston Photo were cropped (removing the color bars) and rendered as RGB Production Masters, also in PCD format, in order to generate JPEG deliverables. Images from the Harvard Daguerreotypes project (438 files) were photographed on 4050 E-6 35mm film stock and scanned with the corresponding Scene Balance Algorithm settings. These were delivered to Harvard as cropped PCD objects. Images from the Richard R. Ree Collection (177 files) were shot on an unspecified film stock, and we are investigating further to determine what settings were used during the scanning process.

- Imaging Services maintains its own collection of Kodak film terms, specific to the SBA settings and film stocks used during scanning, that are not included in pcdMagic’s suite of input color profiles. We added these film terms to the available profiles in order to perform additional tests and QA.

- Images will be exported as both TIFF and DNG for additional QA.

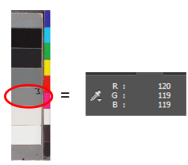

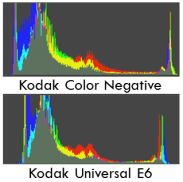

In QA-ing the results in Photoshop, we made some interesting observations. First of all, for the Horblit Collection and Harvard Daguerreotypes, the added Kodak film terms produced results that were wildly superior to the default settings. However, which film term produced the best results varied. For the Horblit Collection, the “Kodak Color Negative” setting proved to be the best setting across the board (for the 5 or 6 images tested). This is good news: when migrating more than 7,000 images, we want an accurate sense of how to sort images into buckets and apply conversion settings uniformly within each batch. If all 6628 images in Horblit are best converted with the same film term setting, then batch-converting all of them with that setting will be much more efficient and less resource-intensive. Additionally, ImageMagick reported an SBA setting of “Unknown Film Type” across the board, so this choice is not necessarily in conflict with any of the embedded metadata. For the Harvard Daguerreotypes, however, the ideal film term is less obvious from image to image. Some images look best with the “Kodak Color Negative” setting, while others show infinitesimally better results with either the “Kodak 4050 E-6” or the “Kodak 4060 K-14” setting. To make matters more complicated, ImageMagick reports an SBA setting of “Kodak Universal E-6” film stock, which never appears to be the best choice within pcdMagic.
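Sorting images into buckets by film term might look like the following sketch. The collection labels, the mapping, and the “NEEDS REVIEW” bucket are hypothetical; only the Horblit assignment reflects a setting that the testing so far supports, while the other collections are held back for image-by-image review.

```python
from collections import defaultdict

# Hypothetical collection -> film term mapping. None means the collection
# still needs image-by-image review before a batch setting can be chosen.
FILM_TERMS = {
    "Horblit": "Kodak Color Negative",
    "Harvard Daguerreotypes": None,
    "Richard R. Ree": None,
}

def plan_batches(files, film_terms=FILM_TERMS):
    """Group (filename, collection) pairs into batches keyed by film term."""
    batches = defaultdict(list)
    for filename, collection in files:
        term = film_terms.get(collection)  # unknown collections also get None
        batches[term if term else "NEEDS REVIEW"].append(filename)
    return dict(batches)

files = [("h001.pcd", "Horblit"), ("d001.pcd", "Harvard Daguerreotypes"),
         ("h002.pcd", "Horblit"), ("r001.pcd", "Richard R. Ree")]
print(plan_batches(files))
# {'Kodak Color Negative': ['h001.pcd', 'h002.pcd'], 'NEEDS REVIEW': ['d001.pcd', 'r001.pcd']}
```

Each resulting batch could then be converted with a single pcdMagic setting, leaving only the review bucket for manual work.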

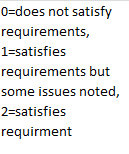

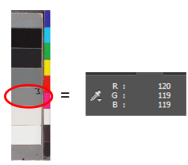

But how do we gauge the success of the transfer anyway? Photoshop is a great application for QA, since it presents histograms of TIFF objects and converts raw formats (such as DNG) to RGB TIFF for comparison. It can also read RGB values at any point in the image, which is especially useful for images with color bars. When the color sampler is dragged across middle gray on the color bars, the RGB channels should be balanced; if the hue or saturation is off, the channels will be unbalanced. This is an example of acceptably balanced gray levels:

In the absence of color bars, the histogram readings are our best friend (well, they always will be, even in the presence of color bars). If an image’s histogram shows no clipped-levels warning and a wide, dynamic distribution, the conversion was a success. Comparing these two readings for one example, we can see that the Kodak Color Negative setting results in a wider, more ideal color gamut. Where the histograms show similar results, converting the image to grayscale can help demonstrate which image better represents luminance levels.
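The two checks described in the last two paragraphs, balanced channels on a middle-gray patch and a histogram free of clipping, can be approximated in a few lines. This is a minimal sketch, assuming 8-bit samples already read out of the image; the tolerance value, function names, and report fields are hypothetical choices of ours rather than Photoshop’s criteria.

```python
def gray_patch_balance(samples, tolerance=3.0):
    """Average (R, G, B) samples from a middle-gray patch and test channel balance.

    Returns (balanced, spread), where spread is the gap between the highest and
    lowest mean channel value. The tolerance is a hypothetical threshold.
    """
    n = len(samples)
    means = [sum(s[i] for s in samples) / n for i in range(3)]
    spread = max(means) - min(means)
    return spread <= tolerance, spread

def clipping_report(values, levels=256):
    """Summarize one channel's histogram: clipped fractions and tonal range used."""
    counts = [0] * levels
    for v in values:
        counts[v] += 1
    used = [level for level, count in enumerate(counts) if count]
    return {
        "clipped_shadows": counts[0] / len(values),
        "clipped_highlights": counts[levels - 1] / len(values),
        "range": (used[0], used[-1]),
        "levels_used": len(used),
    }

print(gray_patch_balance([(118, 119, 120), (120, 119, 118)]))  # (True, 0.0)
print(clipping_report([0, 0, 10, 20, 255]))
# {'clipped_shadows': 0.4, 'clipped_highlights': 0.2, 'range': (0, 255), 'levels_used': 4}
```

By this crude measure, a conversion whose histogram uses more levels and clips fewer pixels has kept more of the tonal range; for 16-bit data, `levels=65536` would be passed instead.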

These examples demonstrate the importance of using acceptability metrics that go beyond what the naked eye can see. It is worth mentioning that I am mildly color blind, so it would be difficult for me to judge which image shows more cyan tones than another. Here is an example where the eye fails to show what is actually present. These two images using different color profiles seem pretty much the same, right? Well, using Photoshop’s subtract feature, we see that they actually differ quite significantly in how they interpret color, as the ghost image that remains demonstrates.
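Photoshop’s subtraction check can be mimicked by differencing two renderings of the same image pixel by pixel. This sketch takes the absolute difference (the behavior of Photoshop’s Difference blend mode) rather than a subtraction clamped at zero, so differences in either direction show up; images are assumed to be same-sized lists of rows of single-channel integers, and the function name is ours.

```python
def difference_stats(a, b):
    """Compare two same-sized single-channel images by absolute pixel difference.

    A 'ghost image' corresponds to nonzero differences; identical renderings
    give max == 0 and nonzero == 0. Sizes are assumed to match (zip would
    silently ignore extra rows or columns).
    """
    diffs = [abs(x - y) for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b)]
    return {
        "max": max(diffs),
        "mean": sum(diffs) / len(diffs),
        "nonzero": sum(1 for d in diffs if d),
    }

render_1 = [[1, 2], [3, 4]]
render_2 = [[1, 5], [3, 0]]
print(difference_stats(render_1, render_1))  # {'max': 0, 'mean': 0.0, 'nonzero': 0}
print(difference_stats(render_1, render_2))  # {'max': 4, 'mean': 1.75, 'nonzero': 2}
```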

But what happens when we subtract a TIFF image from a DNG image with the same output settings? Interestingly enough, the subtraction produced no ghost image. Also, the histograms are almost exactly identical (no differences in gamut, but very slight differences in standard deviation readings…I’ll spare you the details on that). After comparing our results, we are confident that TIFF and DNG produce essentially identical results.
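The histogram comparison between the TIFF and DNG exports (same range, slightly different standard deviation) can be reproduced with per-channel summary statistics. This is a sketch under our own simplification: the minimum and maximum sample values stand in for “gamut”, which is only a rough proxy, and the sample values are made up.

```python
import statistics

def channel_stats(values):
    """Min, max, mean, and population standard deviation of one channel's samples."""
    return {
        "min": min(values),
        "max": max(values),
        "mean": statistics.mean(values),
        "stdev": statistics.pstdev(values),
    }

def compare_channels(a, b):
    """Report whether two renderings span the same range and how their spreads differ."""
    sa, sb = channel_stats(a), channel_stats(b)
    return {
        "same_range": (sa["min"], sa["max"]) == (sb["min"], sb["max"]),
        "stdev_delta": abs(sa["stdev"] - sb["stdev"]),
    }

tiff_channel = [0, 10, 20]  # made-up sample values
dng_channel = [0, 11, 20]
print(compare_channels(tiff_channel, dng_channel)["same_range"])  # True
```

A tiny `stdev_delta` alongside `same_range == True` matches the pattern described for the TIFF and DNG exports: the same span of values with a marginally different distribution.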

Lastly, what about the Richard R. Ree images? The results here are unfortunately even less clear. Though the images show a wider color gamut with the Kodak film terms, some appear more washed out than with some of the default color profile settings. Take this example of an image of clouds. According to the histogram reading, Kodak Color Negative is the more ideal choice. But isn’t the more ideal choice the one in which more details are visible? Perhaps this is more an issue of how the display monitor is calibrated (what you see is not always what you get), but these images require more analysis.

So, to sum it all up, here’s where we are:

- pcdMagic is our tool of choice, and in general the Kodak film terms are the best choice of input color profile. ProPhoto RGB is our output color space, and TIFF and DNG are our output formats, though we are leaning towards TIFF as our Archival Master due to its more device-independent nature. For our Production Master, we are deciding between TIFF and JP2 for generating the deliverables.

- We feel confident using “Kodak Color Negative” for the Horblit Collection, but more analysis needs to be done for the Harvard Daguerreotypes and the Richard R. Ree Collection.

- The testing phase is nowhere near complete. We still need to determine which metadata fields we wish to extract and whether they will map well to the current metadata schemas at Harvard (built largely on the MIX standard). We also need to analyze existing relationships within the repository and adjust the content model as needed. For example, do we need to create a new relationship type of “Migration_Of” for the converted objects? Does the image catalog point to these new objects, or are the original PCD objects still the primary source? Will we even keep the TIFF Production Masters and JPEG deliverables, knowing that they were created using less-than-ideal color mappings? These questions need to be settled before the migration plan is enacted.

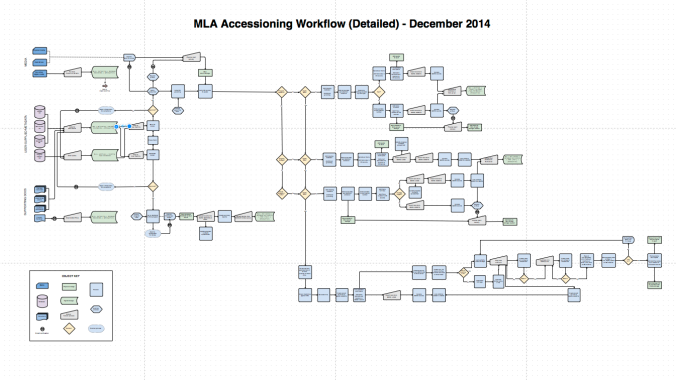

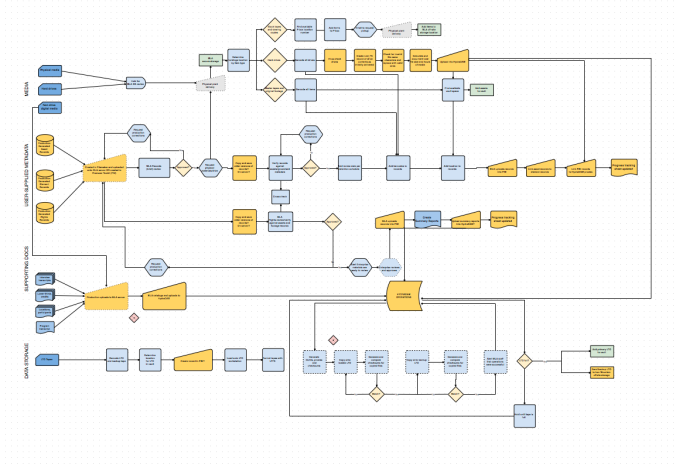

Coming soon will be the workflow diagram, which is currently in draft. Also, the RealAudio/SMIL project is just kicking off, so look for similar analysis updates soon.

-Joey Heinen

Resources Cited:

Burns, P. D., Madden, T. E., Giorgianni, E. J., & Williams, D. (2005). Migration of Photo CD Image Files. IS&T: The Society for Imaging Science and Technology. Retrieved from http://losburns.com/imaging/pbpubs/43Arch05Burns.pdf

pcdMagic. (2014). Retrieved December 19, 2014, from https://sites.google.com/site/pcdmagicsite/

Eastman Kodak Company. (n.d.). Image Pac compression and JPEG compression: What’s the Difference? Retrieved December 8, 2014, from http://www.kodak.com/digitalImaging/samples/imagepacVsJPEG.shtml

Kodak Professional. (2000). Using the ProPhoto RGB Profile in Adobe Photoshop v5.0. Retrieved from http://scarse.sourceforge.net/docs/kodak/ProPhoto-PS.pdf
