As I referenced in my post about World Day for Audiovisual Heritage, MIT’s Lewis Music Library is really looking forward to not only preserving the audio content that we are digitizing, but also finally expanding access to it and awareness of it. For this, we need to identify a suitable access platform – no small feat, I have discovered! Large feat, in fact. Size 11s, at least. Since hearing about the myriad of considerations that inform a software evaluation during one of my MSLIS courses, I’ve actually been harboring a desire to be a part of one – and I haven’t been disappointed! So I thought I would share some of our evaluation process so far.

About the time I began my residency, a team was established to evaluate Avalon Media System as our potential dissemination platform. We leaned toward Avalon because there hasn't been a ton of open source competition in audio/audiovisual access, and the music library had previously looked into using Variations (Avalon's predecessor) before Variations fell by the wayside. Avalon also offers classroom integration, which is important for an academic music library. As we began plotting out the evaluation, however, it became clear that it would be better to establish our requirements first and then measure a few platform options against them. We aren't sure yet which other options we will evaluate, but we will find out as part of the process.

Our evaluation was initiated by the digital curation and preservation team on behalf of the Lewis Music Library. It takes a collaborative approach, actively merging organizational and technological perspectives. The team consists of:

• a couple of representatives from IT,

• our metadata archivist,

• a curatorial representative (someone from the music library who can speak to what their users need),

• and a couple of representatives from digital curation and preservation.

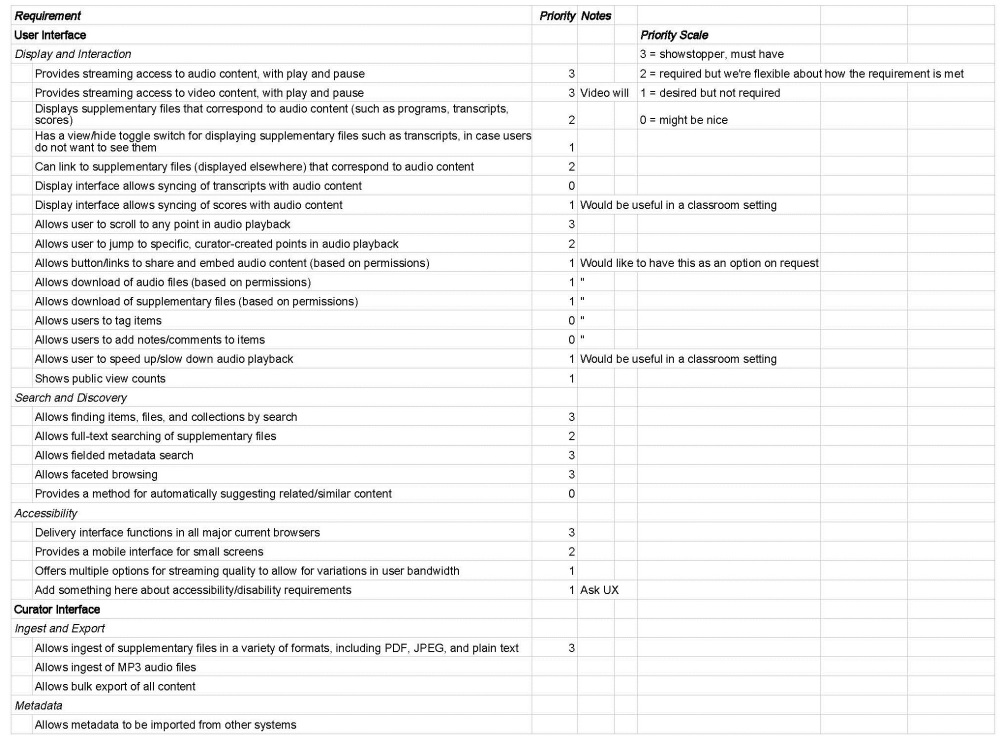

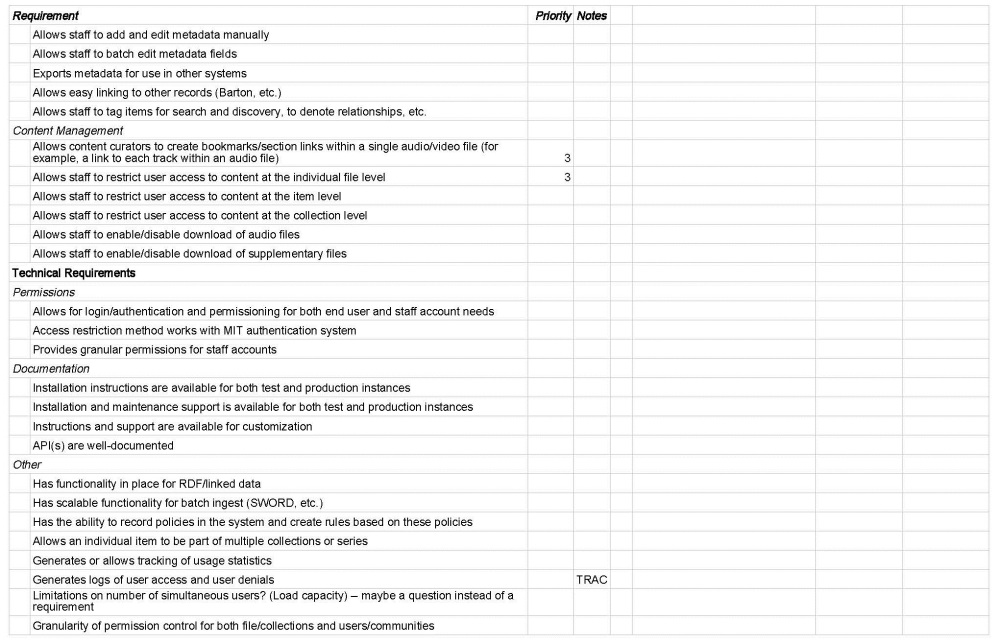

Together we have established most of our organizational and technological requirements, and our Digital Curation Analyst Helen (who was previously MIT's Fellow for Digital Curation and Preservation) has compiled them into a spreadsheet. The list also includes any TRAC (Trustworthy Repositories Audit & Certification) requirements that pertain specifically to access and dissemination, as well as the forward-looking requirement that the system be extensible to video. From here, we are ranking each requirement from 0 (Meh, it might be nice) to 3 (Showstopper, must-have). Once the requirements are ranked, we can evaluate Avalon, and probably two other access systems, against them and figure out which one will work best for us.
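For the curious, here is a minimal Python sketch of one way a 0-to-3 ranking could feed a platform comparison. It is an illustration under assumptions, not our actual spreadsheet method: it assumes an unmet 3 (Showstopper) rules a platform out entirely and that every other met requirement simply adds its rank to a total. The requirement names are loosely drawn from this post, while "Platform A/B/C" and what each supports are hypothetical placeholders, not findings about Avalon or any real system.

```python
from dataclasses import dataclass

# Ranks follow the 0-3 scale described above:
# 0 = "Meh, it might be nice", 3 = "Showstopper, must-have".
SHOWSTOPPER = 3


@dataclass
class Requirement:
    name: str
    rank: int  # 0..3


def score_platform(requirements, met):
    """Return None if any showstopper is unmet; otherwise the sum of ranks of met requirements.

    Assumption (hypothetical rule): a missing must-have disqualifies a platform outright.
    """
    total = 0
    for req in requirements:
        if req.name in met:
            total += req.rank
        elif req.rank == SHOWSTOPPER:
            return None  # unmet showstopper: platform is out of the running
    return total


def rank_platforms(requirements, platforms):
    """Score every platform, drop disqualified ones, and sort best-first."""
    scored = {name: score_platform(requirements, met) for name, met in platforms.items()}
    eligible = [(name, s) for name, s in scored.items() if s is not None]
    return sorted(eligible, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical ranks and platforms, for illustration only.
    requirements = [
        Requirement("streaming audio access", 3),
        Requirement("classroom integration", 2),
        Requirement("extensible to video", 2),
        Requirement("bookmark sections of audio", 1),
        Requirement("variable playback speed", 0),
    ]
    platforms = {
        "Platform A": {"streaming audio access", "classroom integration", "bookmark sections of audio"},
        "Platform B": {"streaming audio access", "extensible to video", "variable playback speed"},
        "Platform C": {"classroom integration", "extensible to video"},
    }
    for name, score in rank_platforms(requirements, platforms):
        print(name, score)
```

Run as-is, this prints "Platform A 6" then "Platform B 5"; Platform C drops out because it lacks the one showstopper. Note that a 0-ranked "nice to have" contributes nothing to the total under this rule, so in practice it might serve only as a tie-breaker.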

Similar to workflow design, this kind of work gives you real insight into many different facets of the library, archive, and institution itself: the users, rights issues, technical abilities, content varieties, &c. I love the aha moments, like: "Ohhhh, of course it would be useful for the curator to be able to bookmark sections of the audio! If an item is an entire concert, and it's better to retain that context than to split it into the separate pieces performed, we'll want to be able to demarcate them." Or: "Hmmm, I never realized how useful the ability to speed up or slow down audio could be!" I'm excited to keep you all abreast of how it pans out, and especially eager to see the music library finally have a good streaming option for their special collections.

If you Avalon, mow it!

Tricia P